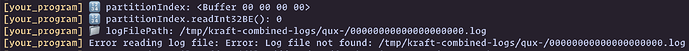

Error reading log file: Error: Log file not found: /tmp/kraft-combined-logs/pax-0.log

remote: [your_program] at readFromFileBuffer (file:///app/app/fetch_api_request.js:106:19)

remote: [your_program] at file:///app/app/fetch_api_request.js:58:15

remote: [your_program] at Array.map (<anonymous>)

remote: [your_program] at handleFetchApiRequest (file:///app/app/fetch_api_request.js:24:8)

remote: [your_program] at Socket.<anonymous> (file:///app/app/main.js:28:7)

remote: [your_program] at Socket.emit (node:events:519:28)

remote: [your_program] at addChunk (node:internal/streams/readable:559:12)

remote: [your_program] at readableAddChunkPushByteMode (node:internal/streams/readable:510:3)

remote: [your_program] at Readable.push (node:internal/streams/readable:390:5)

remote: [your_program] at TCP.onStreamRead (node:internal/stream_base_commons:190:23)

remote: [your_program] recordBatch { messages: [], nextOffset: 0 }

remote: [your_program] node:buffer:587

remote: [your_program] throw new ERR_INVALID_ARG_TYPE(

remote: [your_program] ^

remote: [your_program]

remote: [your_program] TypeError [ERR_INVALID_ARG_TYPE]: The "list[8]" argument must be an instance of Buffer or Uint8Array. Received an instance of Object

remote: [your_program] at Function.concat (node:buffer:587:13)

remote: [your_program] at file:///app/app/fetch_api_request.js:60:45

remote: [your_program] at Array.map (<anonymous>)

remote: [your_program] at handleFetchApiRequest (file:///app/app/fetch_api_request.js:24:8)

remote: [your_program] at Socket.<anonymous> (file:///app/app/main.js:28:7)

remote: [your_program] at Socket.emit (node:events:519:28)

remote: [your_program] at addChunk (node:internal/streams/readable:559:12)

remote: [your_program] at readableAddChunkPushByteMode (node:internal/streams/readable:510:3)

remote: [your_program] at Readable.push (node:internal/streams/readable:390:5)

remote: [your_program] at TCP.onStreamRead (node:internal/stream_base_commons:190:23) {

remote: [your_program] code: 'ERR_INVALID_ARG_TYPE'

remote: [your_program] }

remote: [your_program]

remote: [your_program] Node.js v21.7.3

remote: [tester::#FD8] EOF

remote: [tester::#FD8] Test failed

I'm unable to read the metadata file. What could be the issue?
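
One possible reading of the trace, offered as a sketch rather than a confirmed diagnosis: the read fails because `/tmp/kraft-combined-logs/pax-0.log` does not exist, the code then carries on with a plain object (the logged `recordBatch`), and that object reaches `Buffer.concat` as element 8 of the list. Kafka normally keeps partition data as segment files inside a `<topic>-<partition>` directory, so it may be worth checking whether the path should point into `pax-0/` rather than at `pax-0.log`. The helpers below (`readLogOrEmpty`, `concatParts`) and the example path are hypothetical, not taken from the program. They only illustrate falling back to an empty Buffer and failing early when a non-Buffer part slips in.

```javascript
// CommonJS for brevity; an ES module project would use `import fs from "node:fs"`.
const fs = require("node:fs");

// Hypothetical helper: returns the raw bytes of a log file,
// or an empty Buffer when the file does not exist.
function readLogOrEmpty(logPath) {
  if (!fs.existsSync(logPath)) {
    return Buffer.alloc(0);
  }
  return fs.readFileSync(logPath);
}

// Hypothetical helper: reports which part is not a Buffer/Uint8Array
// before Buffer.concat gets a chance to throw on list[n].
function concatParts(parts) {
  parts.forEach((part, index) => {
    if (!(part instanceof Uint8Array)) {
      throw new TypeError(
        `part ${index} is not a Buffer: ${JSON.stringify(part)}`
      );
    }
  });
  return Buffer.concat(parts);
}

// Example path is an assumption and is expected not to exist.
const records = readLogOrEmpty("/tmp/does-not-exist/example-0.log");
const body = concatParts([Buffer.from([0, 0]), records]);
console.log(body.length);
```

With a guard like this, a missing file yields an empty records section instead of a crash, and any remaining non-Buffer value is reported by its index and contents.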